The Real Cost of AI Systems: What Leaders Need to Know Before Scaling

Most AI strategy conversations start with capability questions. What can the model do? How accurate is it? What problems can it solve?

But there's a more fundamental question that often gets answered too late: What does this actually cost at the scale we're planning to operate?

The Gap Between Theory and Practice

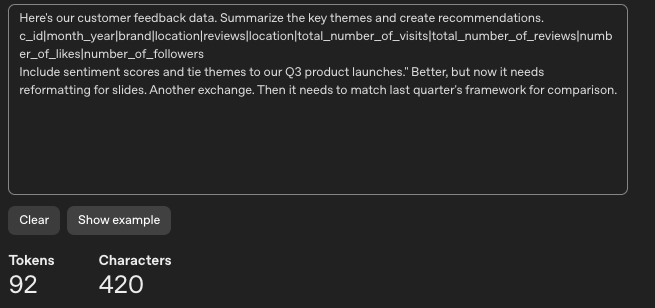

Your marketing team needs to analyze customer feedback from the past quarter and create a summary report. Someone asks GPT to do it: "Here's our customer feedback data. Summarize the key themes and create recommendations." It's a clean request: 50 tokens, as counted by the OpenAI Tokenizer.

Tokens are the units in which LLM inputs and outputs are measured and billed. In English, one token is roughly 3-4 characters, or about three-quarters of a word.
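You can sanity-check token counts before opening a tokenizer by applying those rules of thumb directly. The sketch below assumes 4 characters per token (the upper end of the range) and 0.75 words per token. The function names are hypothetical, and the results are only estimates, because real tokenizers split text differently depending on the model and language.

```python
# Rough token estimates from English-text rules of thumb.
# Heuristics only (assumed ratios), not a real tokenizer.

CHARS_PER_TOKEN = 4.0   # assumption: upper end of "3-4 characters per token"
WORDS_PER_TOKEN = 0.75  # assumption: "about three-quarters of a word" per token


def tokens_from_chars(text: str) -> int:
    """Estimate token count from the number of characters."""
    return round(len(text) / CHARS_PER_TOKEN)


def tokens_from_words(text: str) -> int:
    """Estimate token count from the number of whitespace-separated words."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


if __name__ == "__main__":
    sample = "Summarize last quarter's customer feedback for the leadership team."
    print(tokens_from_chars(sample), tokens_from_words(sample))
```

For exact counts, especially on non-English text or code, use the tokenizer for the specific model you plan to run.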

The first response comes back with general themes and basic structure. Helpful, but it's missing specific metrics. It hasn't connected feedback to the product roadmap. The tone isn't right for executives.

So they clarify. "Include sentiment scores and tie themes to our Q3 product launches." Better, but now it needs reformatting for slides. Another exchange. Then it needs to match last quarter's framework for comparison.


Final tally: 92 tokens of input across multiple prompts, 3,428 tokens of output.

This pattern isn't unique. Research shows roughly two-thirds of ChatGPT users need 2+ prompts per task, with about a third requiring 5+ exchanges to get usable output (Fishkin, 2023). The cost isn't in the initial query; it's in the iteration required to get from "plausible answer" to "working solution."

Running the Numbers

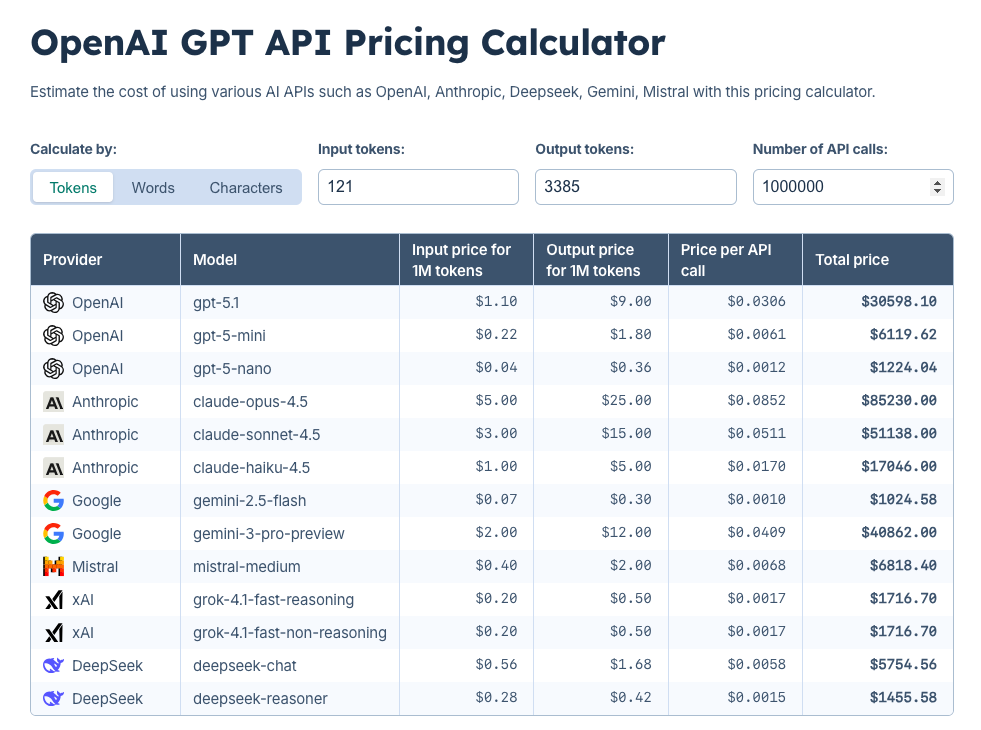

Using the OpenAI GPT API Pricing Calculator:

100 API calls: ~$3

1 million API calls: ~$30,000

That's for GPT-5.1. But for this feedback analysis, the team needed solid insights, not perfect prose or deep statistical analysis. Switching to Gemini 2.5 could drop that million-call cost to roughly $1,000.
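The arithmetic behind those figures is simple enough to script, so you can rerun it whenever prices or token counts change. This is a minimal sketch. The tier names and per-million-token prices are placeholder assumptions, not quoted rates, so substitute current figures from each provider's pricing page. The token counts (92 input, 3,428 output) come from the example above.

```python
# Per-call and at-scale API cost from token counts.
# Prices are USD per million tokens and are PLACEHOLDER assumptions;
# check each provider's current pricing page before relying on them.

from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_m: float   # assumed USD per 1M input tokens
    output_per_m: float  # assumed USD per 1M output tokens


def cost_per_call(input_tokens: int, output_tokens: int, price: Pricing) -> float:
    """USD cost of one call at the given per-million-token prices."""
    return (input_tokens * price.input_per_m
            + output_tokens * price.output_per_m) / 1_000_000


# Hypothetical tiers; the numbers are assumptions, not quoted prices.
TIERS = {
    "flagship": Pricing(input_per_m=1.25, output_per_m=10.00),
    "budget": Pricing(input_per_m=0.10, output_per_m=0.40),
}

if __name__ == "__main__":
    # Token tally from the feedback-analysis example.
    for name, price in TIERS.items():
        per_call = cost_per_call(92, 3_428, price)
        print(f"{name}: 100 calls ${per_call * 100:,.2f}, "
              f"1M calls ${per_call * 1_000_000:,.0f}")
```

With these placeholder prices, the flagship tier comes to about $3.44 per 100 calls and roughly $34,400 per million. The budget tier lands near $1,380 per million. That is the same order of magnitude as the calculator figures above.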

The strategic question: Are you paying for capability you don't actually need?

The Tradeoff Matrix Leaders Should Map

Accuracy vs. Cost: State-of-the-art models cost significantly more. For customer-facing responses or critical decisions, that premium might be worth it. For internal summaries or draft content, probably not.

Speed vs. Power: Faster models sacrifice some capability. Real-time customer queries need speed. Monthly report generation doesn't.

Context Length vs. Efficiency: Input is billed per token, so longer inputs make every call more expensive. Entire quarterly reports as context? You pay for every token. Sending only the sections a task actually needs? You save considerably.
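The context-length tradeoff is mostly multiplication, because the same context is billed again on every call. The sketch below compares a whole report against a single relevant section. The token counts and the input price are hypothetical. The saving comes from sending fewer tokens. If you split a report into sections and still send every section, the total cost stays about the same.

```python
# Input cost of sending a whole report vs. only the section a task needs.
# Token counts and the price are hypothetical placeholders.

INPUT_PRICE_PER_M = 1.25  # assumed USD per 1M input tokens


def input_cost(context_tokens: int, calls: int,
               price_per_m: float = INPUT_PRICE_PER_M) -> float:
    """USD spent on input when the same context is sent on every call."""
    return context_tokens * calls * price_per_m / 1_000_000


if __name__ == "__main__":
    full_report = 40_000      # hypothetical: whole quarterly report as context
    relevant_section = 4_000  # hypothetical: only the section the task needs
    calls = 10_000
    print(f"full report:      ${input_cost(full_report, calls):,.2f}")
    print(f"relevant section: ${input_cost(relevant_section, calls):,.2f}")
```

Under those assumptions, 10,000 calls cost $500 of input with the full report and $50 with the relevant section.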

Most organizations pick a model tier (usually the flagship) and apply it uniformly. That's leaving money on the table. The better strategy is to match model capability to the specific requirements of each use case.

Three Planning Questions

What's your realistic usage multiplier?

Don't build your cost model on single exchanges. If your team averages 3-5 prompts per task, multiply your projected costs by that factor. Tools like the OpenAI Tokenizer let you measure actual token usage across real workflows.
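One way to derive that multiplier is to take a weighted average of how many exchanges tasks actually need. In the sketch below, the distribution is a hypothetical reading of the research figures: a third of tasks finish in one prompt, a third in two to four, and a third in five or more. Each bucket uses an assumed representative count, and the function names are illustrative.

```python
# Expected prompts per task from a distribution, and the resulting
# multiplier on a single-exchange cost estimate. All inputs are hypothetical.

def expected_prompts(distribution: dict[int, float]) -> float:
    """Mean prompts per task; keys are prompt counts, values are shares."""
    total_share = sum(distribution.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError(f"shares must sum to 1, got {total_share}")
    return sum(count * share for count, share in distribution.items())


def projected_cost(single_exchange_cost: float, tasks: int,
                   distribution: dict[int, float]) -> float:
    """Scale a single-exchange cost by task volume and expected prompts."""
    return single_exchange_cost * tasks * expected_prompts(distribution)


if __name__ == "__main__":
    # Hypothetical buckets: 1 prompt, 2-4 prompts (use 3), 5+ prompts (use 5).
    dist = {1: 1 / 3, 3: 1 / 3, 5: 1 / 3}
    print(expected_prompts(dist))
    print(projected_cost(single_exchange_cost=0.01, tasks=10_000,
                         distribution=dist))
```

With that assumed distribution the multiplier works out to 3, which is the low end of the 3-5 range. The model treats every exchange as costing the same. Replace the distribution with counts from your own workflows.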

Have you mapped requirements to model tiers?

Create a matrix of your use cases and their requirements. Legal contract review might need GPT-4's accuracy. Meeting summaries might work fine with GPT-3.5 or Claude Haiku. Customer feedback analysis could use mid-tier models. The goal is precision matching, not blanket deployment.
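That matrix can also serve as a simple routing rule: for each use case, pick the cheapest tier that meets its minimum bar. In the sketch below, the tier names, capability scores, per-call costs, and requirements are all hypothetical placeholders. In practice, the scores would come from testing each model on your own tasks.

```python
# Route each use case to the cheapest tier that meets its minimum capability.
# Tier names, scores, costs, and requirements are hypothetical placeholders.

from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    capability: int       # assumed score from your own evals (higher = better)
    cost_per_call: float  # assumed USD per call for a typical task


TIERS = [
    Tier("flagship", capability=3, cost_per_call=0.034),
    Tier("mid", capability=2, cost_per_call=0.009),
    Tier("small", capability=1, cost_per_call=0.0014),
]

# Minimum capability each use case needs (hypothetical).
REQUIREMENTS = {
    "legal contract review": 3,
    "customer feedback analysis": 2,
    "meeting summaries": 1,
}


def cheapest_tier(min_capability: int, tiers: list[Tier] = TIERS) -> Tier:
    """Return the lowest-cost tier whose capability meets the requirement."""
    eligible = [t for t in tiers if t.capability >= min_capability]
    if not eligible:
        raise ValueError(f"no tier meets capability {min_capability}")
    return min(eligible, key=lambda t: t.cost_per_call)


if __name__ == "__main__":
    for use_case, needed in REQUIREMENTS.items():
        print(f"{use_case}: {cheapest_tier(needed).name}")
```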

What's your cost scaling curve?

Model your expected costs at 10x, 100x, and 1000x your initial volume. If the curve breaks your economics, you need to know that before you scale, not after.
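A scaling curve can be as simple as multiplying the pilot volume out and checking each point against a budget. This sketch assumes cost grows linearly with volume, with no negotiated discounts or caching. The pilot volume, per-call cost, and budget are hypothetical.

```python
# Project monthly cost at 10x, 100x, and 1000x a pilot's volume and flag any
# point over budget. Assumes linear scaling (no discounts or caching); every
# input below is a hypothetical placeholder.


def scaling_curve(
    pilot_calls: int,
    cost_per_call: float,
    monthly_budget: float,
    multipliers: tuple[int, ...] = (1, 10, 100, 1000),
) -> list[tuple[int, float, bool]]:
    """Return (multiplier, projected monthly cost, within budget?) rows."""
    rows = []
    for m in multipliers:
        cost = pilot_calls * m * cost_per_call
        rows.append((m, cost, cost <= monthly_budget))
    return rows


if __name__ == "__main__":
    curve = scaling_curve(pilot_calls=1_000, cost_per_call=0.034,
                          monthly_budget=20_000)
    for m, cost, ok in curve:
        print(f"{m:>5}x  ${cost:>12,.2f}  {'ok' if ok else 'OVER BUDGET'}")
```

With those inputs, the 1000x point projects to about $34,000 a month. It is the first point to exceed the assumed $20,000 budget.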

Why This Matters for Strategy

AI systems scale differently than traditional software. With SaaS, you pay per seat regardless of usage intensity. With AI, cost rises directly with usage.

Success means more expense. If your AI implementation works exactly as planned, with high engagement and widespread usage, your costs grow right along with it. Unless you've structured your model strategy correctly, that success becomes unsustainable.

The organizations getting this right aren't necessarily the ones with the biggest AI budgets. They're the ones doing the systematic work upfront: measuring real prompt patterns, testing model tiers against specific requirements, understanding their cost curve before they're locked into it.

Starting Point

Run a pilot with actual tracking.

Use real tasks from your team.

Measure the prompt-to-solution ratio.

Calculate costs across different model tiers using the pricing calculators.

Map your findings to projected scale.
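The pilot steps above can feed a small summary script. This sketch assumes each record logs the prompts, input tokens, and output tokens for one completed task. The record fields, tier prices, and monthly volume are hypothetical placeholders, not any tool's actual log format.

```python
# Summarize a pilot log: prompt-to-solution ratio and projected monthly cost
# per tier. Records, prices, and volume are hypothetical placeholders.

from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class TaskRecord:
    prompts: int        # exchanges needed to reach usable output
    input_tokens: int   # total input tokens across those exchanges
    output_tokens: int  # total output tokens across those exchanges


def summarize(records: list[TaskRecord],
              prices: dict[str, tuple[float, float]],
              tasks_per_month: int) -> dict[str, float]:
    """prices maps tier name -> (USD per 1M input, USD per 1M output)."""
    avg_in = mean(r.input_tokens for r in records)
    avg_out = mean(r.output_tokens for r in records)
    summary = {"prompts_per_task": mean(r.prompts for r in records)}
    for tier, (p_in, p_out) in prices.items():
        per_task = (avg_in * p_in + avg_out * p_out) / 1_000_000
        summary[f"{tier}_monthly_usd"] = per_task * tasks_per_month
    return summary


if __name__ == "__main__":
    pilot = [  # hypothetical pilot records
        TaskRecord(prompts=1, input_tokens=60, output_tokens=900),
        TaskRecord(prompts=2, input_tokens=80, output_tokens=1_500),
        TaskRecord(prompts=6, input_tokens=210, output_tokens=5_100),
    ]
    tier_prices = {"flagship": (1.25, 10.00), "budget": (0.10, 0.40)}  # assumed
    for key, value in summarize(pilot, tier_prices, 50_000).items():
        print(f"{key}: {value:,.2f}")
```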

The exercise won't be glamorous. But it's the difference between an AI strategy built on actual economics and one built on capability demos and vendor promises.

How are you approaching AI cost modeling in your organization? Let's discuss.

Reference

Fishkin, Rand. “We Analyzed Millions of ChatGPT User Sessions: Visits Are Down 29% Since May, Programming Assistance Is 30% of Use.” SparkToro, 30 Aug. 2023, sparktoro.com/blog/we-analyzed-millions-of-chatgpt-user-sessions-visits-are-down-29-since-may-programming-assistance-is-30-of-use/