Chat With Your Documents: Build a RAG AI Agent with No Code in 20 minutes

In my previous tutorials, you built AI agents for web scraping and conversations. Today, you'll teach an AI to answer questions about YOUR documents.

What you're building: A simple chatbot that reads your documents and answers questions grounded in their content, which sharply reduces hallucinations.

What you need: N8N account, OpenAI API key, a document, 20 minutes.

Why This Matters

ChatGPT doesn't know your company policies, resume, or product docs. RAG (Retrieval-Augmented Generation) fixes this by letting AI pull from YOUR documents before answering.

Without RAG: "Tell me about my work experience" → Generic advice

With RAG: Specific details from your resume about Johns Hopkins, Morgan State, your M.Sc.

This is how companies build internal assistants, support bots, and knowledge bases.

How It Works

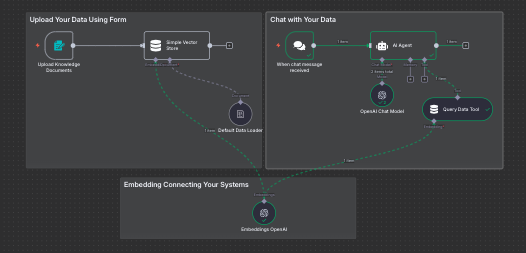

Two workflows that share data:

Workflow 1 - Upload: Document → Breaks into chunks → Converts to vectors → Stores in database

Workflow 2 - Chat: Chat message → AI Agent → Chat Model → Searches database → AI reads chunks and answers

Vectors are coordinates on a mathematical map: texts with similar meanings land close together. When you ask "What university?", the search finds education chunks, not hobby chunks.
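To make the "map" idea concrete, here is a toy Python sketch. It assumes cosine similarity as the closeness measure (a common choice for comparing embeddings), and the 3-dimensional vectors and chunk texts are hypothetical and hand-made for illustration; real text-embedding-3-small vectors have 1,536 dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical hand-made embeddings; the three axes loosely stand for
# (education, hobbies, work) purely for illustration.
chunks = {
    "M.Sc. Engineering Management, Johns Hopkins": [0.9, 0.1, 0.2],
    "Hobbies: hiking and photography": [0.1, 0.9, 0.0],
    "Work experience: project engineering": [0.2, 0.1, 0.9],
}
query = [0.8, 0.0, 0.3]  # pretend embedding of "What university?"

# Pick the chunk whose vector points most nearly the same way as the query.
best = max(chunks, key=lambda text: cosine_similarity(chunks[text], query))
print(best)  # → M.Sc. Engineering Management, Johns Hopkins
```

The education chunk scores about 0.98 against the query, the work chunk about 0.54 and the hobby chunk about 0.10, so the search returns education, not hobbies.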

Build Part 1: Upload Workflow

Step 1: Create workflow, add Form Trigger

Add form field: Type = File, Name = "document", Accept = .pdf,.txt

Form Title: "Upload Knowledge Document"

Step 2: Add Simple Vector Store (not connected yet)

Mode: Insert Documents

Memory Key: my-knowledge-base (critical: you'll reuse this)
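Why is the Memory Key so critical? Conceptually, an in-memory vector store behaves like a dictionary from key to a list of stored chunks, so workflows using the same key read and write the same list. The Python class below is a hypothetical toy model of that behaviour, not n8n's actual implementation.

```python
from collections import defaultdict

class ToyVectorStore:
    """Hypothetical stand-in for an in-memory vector store shared by key."""

    def __init__(self) -> None:
        # memory key -> list of (chunk text, embedding vector)
        self._data: dict[str, list[tuple[str, list[float]]]] = defaultdict(list)

    def insert(self, memory_key: str, text: str, vector: list[float]) -> None:
        self._data[memory_key].append((text, vector))

    def documents(self, memory_key: str) -> list[tuple[str, list[float]]]:
        # Unknown key: return an empty list without creating a new entry.
        return list(self._data.get(memory_key, []))

store = ToyVectorStore()
store.insert("my-knowledge-base", "Morgan State University, B.Sc.", [0.9, 0.1])

print(len(store.documents("my-knowledge-base")))  # 1: same key, data found
print(len(store.documents("my-knowledge_base")))  # 0: one-character typo, empty store
```

In this model, a retrieval step pointed at a misspelled key finds nothing at all, which is the kind of failure that would surface in chat as "I don't have that information."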

Step 3: Click Vector Store's "Document Loader" port → Add Default Data Loader

Text Splitter: Recursive Character Text Splitter

Chunk Size: 1000, Overlap: 200
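A simplified Python sketch helps show how Chunk Size and Overlap interact. This is NOT the Recursive Character Text Splitter itself, which first tries to break on paragraph and sentence boundaries; it is a plain fixed-size sliding window over characters, swapped in here only to illustrate the two numbers.

```python
def split_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Fixed-size character windows; each chunk repeats the last `overlap`
    characters of the previous one, so text near a boundary appears in
    both neighbouring chunks."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks
```

With the tutorial's settings (1000/200), a 2,600-character resume would produce three chunks starting at characters 0, 800 and 1,600, each sharing 200 characters with its neighbour.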

Step 4: Click Vector Store's "Embedding" port → Add Embeddings OpenAI

Model: text-embedding-3-small

Add your API key

Step 5: Execute workflow, upload test document, verify chunks created.

In my case, I created a .txt file containing my resume to use for testing.

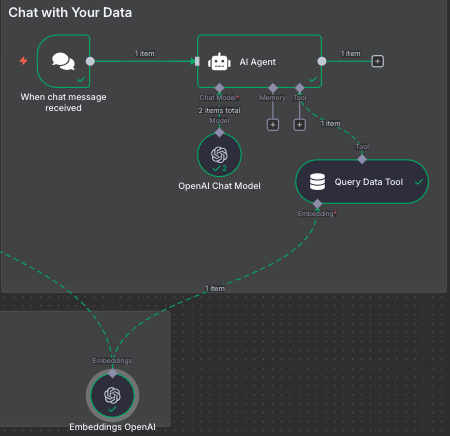

Build Part 2: Chat Workflow

Step 1: New workflow, add the "When chat message received" trigger

Public Chat: ON

Initial Message: "Ask me anything about uploaded documents"

Step 2: Add AI Agent, connect to Chat Trigger

System Message (under the Agent's Options):

You answer questions using only the knowledge base.

If no relevant info found, say "I don't have that information."

Be specific. Cite details.

Step 3: Click Agent's "Model" port → Add OpenAI Chat Model

Model: gpt-4o-mini

Temperature: 0.2 (factual, not creative)
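Temperature rescales the model's next-token scores before one is sampled. A minimal Python sketch of the standard softmax-with-temperature formula, using hypothetical scores for three candidate tokens, shows why a low value like 0.2 makes the output far more predictable.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores into probabilities; a lower temperature
    concentrates probability on the highest-scoring token."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these made-up scores, the top token gets about 63% of the probability at temperature 1.0 but over 99% at 0.2, so repeated questions tend to get the same wording.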

Step 4: Click Agent's "Tools" port → Add Simple Vector Store

Mode: Retrieve Documents (as Tool)

Memory Key: my-knowledge-base (MUST MATCH upload workflow)

Description: "Search knowledge base for facts, dates, names, details"

Limit: 4
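Limit sets how many of the closest chunks the tool hands back to the agent. Here is a minimal top-k sketch in Python, assuming unit-length vectors so that cosine similarity reduces to a plain dot product; the chunk labels and 2-dimensional vectors are hypothetical.

```python
import heapq

def top_k(query: list[float], chunks: list[tuple[str, list[float]]], k: int = 4) -> list[str]:
    """Return the texts of the k chunks whose vectors score highest against
    the query (dot product equals cosine similarity for unit vectors)."""
    def score(item: tuple[str, list[float]]) -> float:
        return sum(q * v for q, v in zip(query, item[1]))
    return [text for text, _ in heapq.nlargest(k, chunks, key=score)]

# Hypothetical unit-length vectors; dot products with the query are
# 1.0, 0.8, 0.6, 0.28, 0.0 and -1.0 respectively.
chunks = [
    ("education", [1.0, 0.0]),
    ("degree dates", [0.8, 0.6]),
    ("skills", [0.6, 0.8]),
    ("certifications", [0.28, 0.96]),
    ("hobbies", [0.0, 1.0]),
    ("references", [-1.0, 0.0]),
]
query = [1.0, 0.0]
print(top_k(query, chunks, k=4))  # ['education', 'degree dates', 'skills', 'certifications']
```

Raising the limit to 6, as suggested under Common Issues, would simply pass the next-closest chunks to the agent as well, at the cost of a longer prompt.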

Step 5: Click this Vector Store's "Embedding" port → Add Embeddings OpenAI

Model: text-embedding-3-small (MUST MATCH upload workflow)
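Why must the embedding model match? Vectors from different models live in different coordinate systems, and they may not even have the same length: by default text-embedding-3-small returns 1,536 numbers per text, while text-embedding-3-large returns 3,072. The hypothetical Python guard below records the model name next to the stored vectors so a mismatch becomes a clear error; it is an illustrative design, not a description of how n8n's nodes behave.

```python
from dataclasses import dataclass, field

@dataclass
class EmbeddedCollection:
    """Hypothetical collection that remembers which model produced its vectors."""
    model: str
    dimensions: int
    vectors: list[list[float]] = field(default_factory=list)

    def add(self, vector: list[float]) -> None:
        if len(vector) != self.dimensions:
            raise ValueError(f"expected {self.dimensions} dims, got {len(vector)}")
        self.vectors.append(vector)

    def check_query(self, model: str, query: list[float]) -> None:
        # Same length is not enough: different models use different spaces.
        if model != self.model:
            raise ValueError(f"query embedded with {model}, store uses {self.model}")
        if len(query) != self.dimensions:
            raise ValueError(f"expected {self.dimensions} dims, got {len(query)}")

kb = EmbeddedCollection(model="text-embedding-3-small", dimensions=1536)
kb.add([0.0] * 1536)
try:
    kb.check_query("text-embedding-3-large", [0.0] * 3072)
except ValueError as err:
    print(err)  # query embedded with text-embedding-3-large, store uses text-embedding-3-small
```

Checking the model name, not just the vector length, matters because two different models can produce vectors of the same length whose similarity scores are still meaningless against each other.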

Step 6: Execute, get chat URL, ask questions about your document.

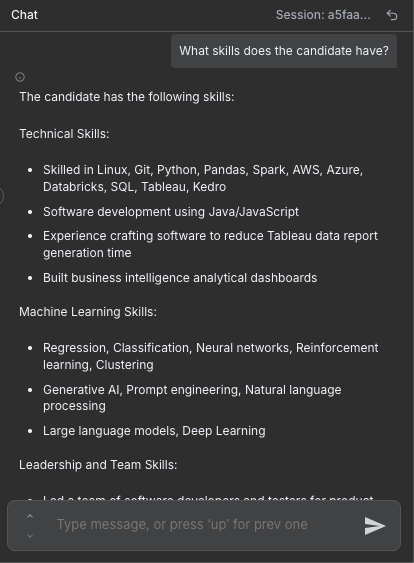

Real Example

I uploaded my resume. Asked: "What university did the candidate go to?"

Response: "Johns Hopkins University (M.Sc. Engineering Management, May 2016) and Morgan State University (B.Sc. Electrical Engineering, May 2013)."

No invented details. Every fact came from my document.

Common Issues

"I don't have that information" but it's clearly there:

Both workflows must use an identical Memory Key

Increase Limit to 6 chunks

Decrease chunk size to 500-700

AI uses general knowledge instead of documents:

Add to prompt: "NEVER use training data. ONLY use knowledge base tool."

Production Upgrades

Simple Vector Store works for learning, but its data is cleared whenever n8n restarts.

For production:

Pinecone/Qdrant: Persistent storage

Authentication: Add password/API key protection

Metadata: Tag documents by department/date for filtering

Cost monitoring: Track OpenAI API usage
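Metadata filtering narrows the search before similarity ranking. Here is a minimal Python sketch with hypothetical `department` and `date` fields and made-up chunk texts; production stores such as Pinecone and Qdrant have their own filter syntax, which this does not reproduce.

```python
from datetime import date

# Hypothetical chunks tagged with metadata at upload time.
chunks = [
    {"text": "Vacation: 15 days per year", "department": "HR", "date": date(2024, 1, 10)},
    {"text": "Vacation: 10 days per year", "department": "HR", "date": date(2021, 3, 2)},
    {"text": "Reset your password from Settings", "department": "Support", "date": date(2023, 6, 1)},
]

def filter_chunks(items: list[dict], department: str, since: date) -> list[dict]:
    """Keep only chunks from one department dated on or after `since`."""
    return [c for c in items if c["department"] == department and c["date"] >= since]

current_hr = filter_chunks(chunks, department="HR", since=date(2023, 1, 1))
print([c["text"] for c in current_hr])  # ['Vacation: 15 days per year']
```

Only the surviving chunks would then be ranked by similarity, so an outdated policy could not be retrieved for "What's our vacation policy?"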

What You Built

Document → Chunks → Vectors → Storage → Search → Answer

This architecture powers:

HR bots ("What's our vacation policy?")

Support bots ("How do I reset password?")

Research assistants (50-paper summaries)

Documentation bots ("How do I use this API?")

Subscribe for the next tutorial. Tag me on LinkedIn if you build something.

The insight: Companies winning with AI use better systems, not better models. You just built one.

Resources: N8N RAG Docs | RAG Template